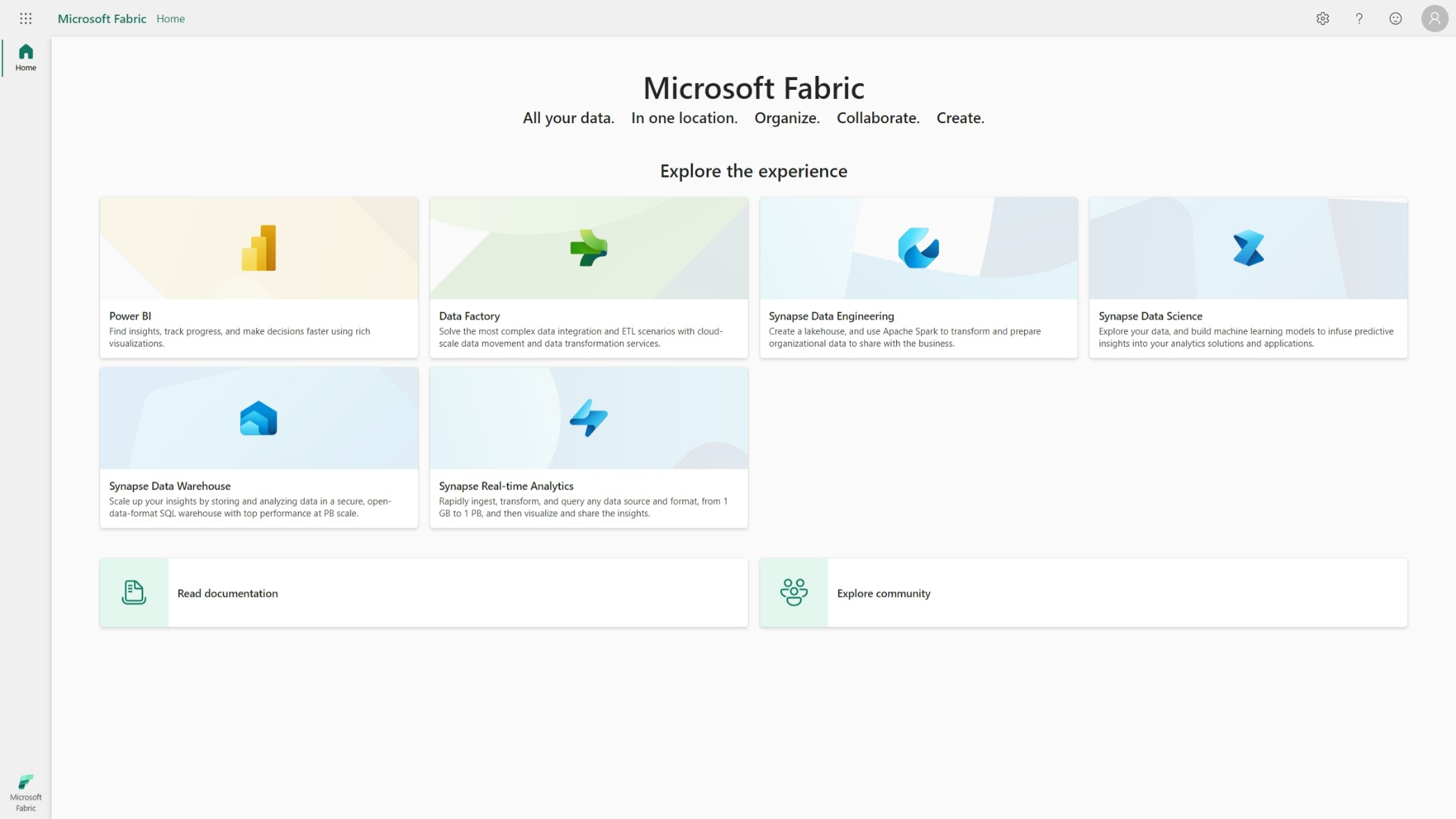

Connect to Fabric Lakehouses & Warehouses from Python code

In this post, I will show you how to connect to your Microsoft Fabric Lakehouses and Warehouses from Python.

Packages & dependencies

To connect to Fabric, we’ll use the Microsoft ODBC Driver. This driver is available for Windows, Linux, and macOS. Click on your operating system to download and install the driver:

Next, we’ll need a Python package to connect using ODBC and a Python package to authenticate with Azure Active Directory. We can install them like so:

1pip install pyodbc azure-identity

Authentication

Next, we have to decide how you want to authenticate. Fabric relies on Azure Active Directory for authentication. While in the future it will be possible to use fancy mechanisms like Service Principals and Managed Identities, for now, you can only authenticate as yourself. This still leaves us with many authentication options on the table:

- Using your login session from the Azure CLI

- Using your login session from the Azure Developer CLI

- Using your login session from Azure PowerShell

- Using your login session from Visual Studio Code

- Opening a browser to authenticate

All of the options above use an external factor to authenticate, which makes our code quite simple. For example, if you want to use your Azure CLI login session, you can use the following code:

1from azure.identity import AzureCliCredential

2

3credential = AzureCliCredential()

The code is similar for the other options. If you want to use a browser to authenticate, you can use the following code:

1from azure.identity import InteractiveBrowserCredential

2

3credential = InteractiveBrowserCredential()

Building the connection string

Now that we have our authentication mechanism in place, we can build the connection string. The connection string is a standard ODBC connection string and for Fabric, it looks like this:

1sql_endpoint = "" # copy and paste the SQL endpoint from any of the Lakehouses or Warehouses in your Fabric Workspace

2database = "" # copy and paste the name of the Lakehouse or Warehouse you want to connect to

3

4connection_string = f"Driver={{ODBC Driver 18 for SQL Server}};Server={sql_endpoint},1433;Database=f{database};Encrypt=Yes;TrustServerCertificate=No"

Building the connection

To build the connection, we have to first use our credentials from above to retrieve an access token we can pass to Fabric. This is all very technical, but you can follow along with the comments in the code below:

1import struct

2from itertools import chain, repeat

3import pyodbc

4

5

6# prepare the access token

7

8token_object = credential.get_token("https://database.windows.net//.default") # Retrieve an access token valid to connect to SQL databases

9token_as_bytes = bytes(token.token, "UTF-8") # Convert the token to a UTF-8 byte string

10encoded_bytes = bytes(chain.from_iterable(zip(value, repeat(0)))) # Encode the bytes to a Windows byte string

11token_bytes = struct.pack("<i", len(encoded_bytes)) + encoded_bytes # Package the token into a bytes object

12attrs_before = {1256: token_bytes} # Attribute pointing to SQL_COPT_SS_ACCESS_TOKEN to pass access token to the driver

13

14

15# build the connection

16

17connection = pyodbc.connect(connection_string, attrs_before=attrs_before)

Now we can use the connection to run SQL queries:

1cursor = connection.cursor()

2cursor.execute("SELECT * FROM sys.tables")

3rows = cursor.fetchall()

4print(rows) # this will print all the tables available in the lakehouse or warehouse

Make sure to close the cursor and the connection when you’re done:

1cursor.close()

2connection.close()

The whole API to query databases from Python is documented in PEP 249

. More pyodbc documentation is available in the project’s wiki

.

Putting it all together

Below you can find the complete example, merging all the steps from above:

1import struct

2from itertools import chain, repeat

3

4import pyodbc

5from azure.identity import AzureCliCredential

6

7credential = AzureCliCredential() # use your authentication mechanism of choice

8sql_endpoint = "" # copy and paste the SQL endpoint from any of the Lakehouses or Warehouses in your Fabric Workspace

9database = "" # copy and paste the name of the Lakehouse or Warehouse you want to connect to

10

11connection_string = f"Driver={{ODBC Driver 18 for SQL Server}};Server={sql_endpoint},1433;Database=f{database};Encrypt=Yes;TrustServerCertificate=No"

12

13token_object = credential.get_token("https://database.windows.net//.default") # Retrieve an access token valid to connect to SQL databases

14token_as_bytes = bytes(token.token, "UTF-8") # Convert the token to a UTF-8 byte string

15encoded_bytes = bytes(chain.from_iterable(zip(value, repeat(0)))) # Encode the bytes to a Windows byte string

16token_bytes = struct.pack("<i", len(encoded_bytes)) + encoded_bytes # Package the token into a bytes object

17attrs_before = {1256: token_bytes} # Attribute pointing to SQL_COPT_SS_ACCESS_TOKEN to pass access token to the driver

18

19connection = pyodbc.connect(connection_string, attrs_before=attrs_before)

20cursor = connection.cursor()

21cursor.execute("SELECT * FROM sys.tables")

22rows = cursor.fetchall()

23print(rows)

24

25cursor.close()

26connection.close()

You might also like

If you liked this article, follow me on LinkedIn or other social media to stay up-to-date with my latest posts. You might also like the following 2 posts about related topics:

Welcome to the 3rd generation: SQL in Microsoft Fabric

While typing this blog post, I’m flying back from the Data Platform Next Step conference where I gave a talk about using dbt with Microsoft Fabric . DP Next Step was the first conference focussed on Microsoft data services right after the announcement of Microsoft Fabric so a lot of speakers were Microsoft employees and most of the talks had some Fabric content. Fabric Fabric Fabric, what is it all about? In this post I’ll go deeper into what it is, why you should care and focus specifically on the SQL aspect of Fabric.

Preparing a migration to Microsoft Fabric: from Azure Synapse Serverless SQL

If all those posts about Microsoft Fabric have made you curious, you might want to consider it as your next data platform. Since it is very new, not all features are available yet and most are still in preview. You could already adopt it, but if you want to deploy this to a production scenario, you’ll want to wait a bit longer. In the meantime, you can already start preparing for the migration. Let’s dive into the paths to migrate to Microsoft Fabric. Today: starting from Synapse Serverless SQL Pools.