data warehouse

Fabric: Lakehouse or Data Warehouse?

There are 2 kinds of companies currently active in the Microsoft data space: those who are migrating to Microsoft Fabric, and those who will soon be planning their migration to Microsoft Fabric. 😅 One question that keeps coming back is: should I focus on the Lakehouse or the Data Warehouse? Let’s answer that in this post. I can already tell you this: you’re asking the wrong question 😉

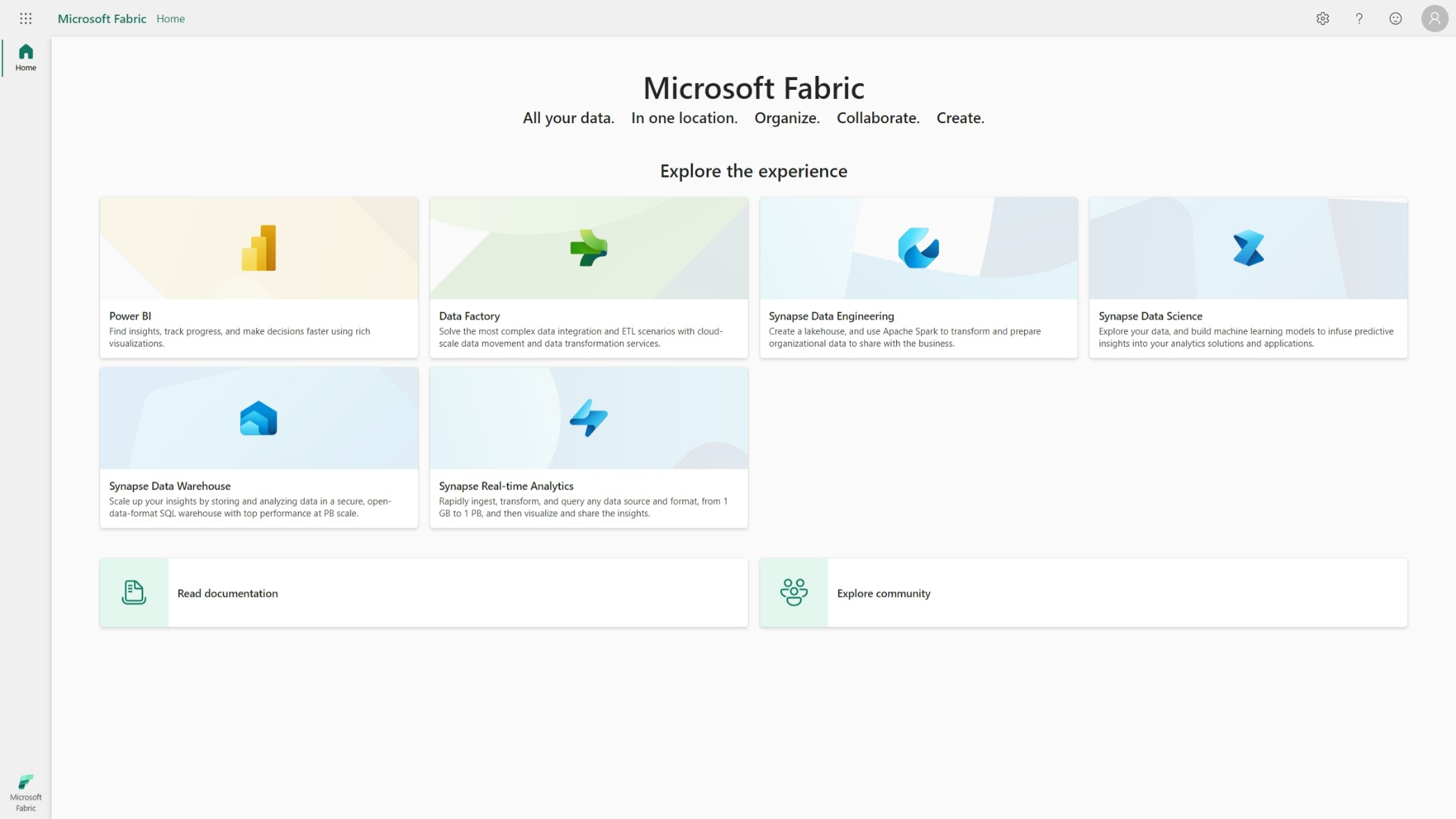

Is Microsoft Fabric just a rebranding?

It’s a question I see popping up every now and then. Is Microsoft Fabric just a rebranding of existing Azure services like Synapse, Data Factory, Event Hubs, Stream Analytics, etc.? Is it something more? Or is it something entirely new? I hate clickbait titles as much as you do, so before we dive in, let me answer the question right away. No, Fabric is not just a rebranding. I would not even describe Fabric as an evolution (as Microsoft often does), but rather as a revolution! Now, let’s find out why.

My take-aways from Big Data London: Delta Lake & the open lakehouses

Last week I attended Big Data London. Both days were filled with interesting sessions, most of them focussing on one of the vendors exhibiting at the conference. There are 2 things I am taking away from this conference: Delta Lake has won the data format wars, and your next data platform is either Snowflake or an open Lakehouse.

Migrating Azure Synapse Dedicated SQL to Microsoft Fabric

If all those posts about Microsoft Fabric have made you excited, you might want to consider it as your next data platform. Since it is very new, not all features are available yet and most are still in preview. You could already adopt it, but if you want to run it in production, you’ll want to wait a bit longer. In the meantime, you can start preparing for the migration. Let’s dive into the steps to migrate to Microsoft Fabric. Today: starting from Synapse Dedicated SQL Pools.

Connect to Fabric Lakehouses & Warehouses from Python code

In this post, I will show you how to connect to your Microsoft Fabric Lakehouses and Warehouses from Python.

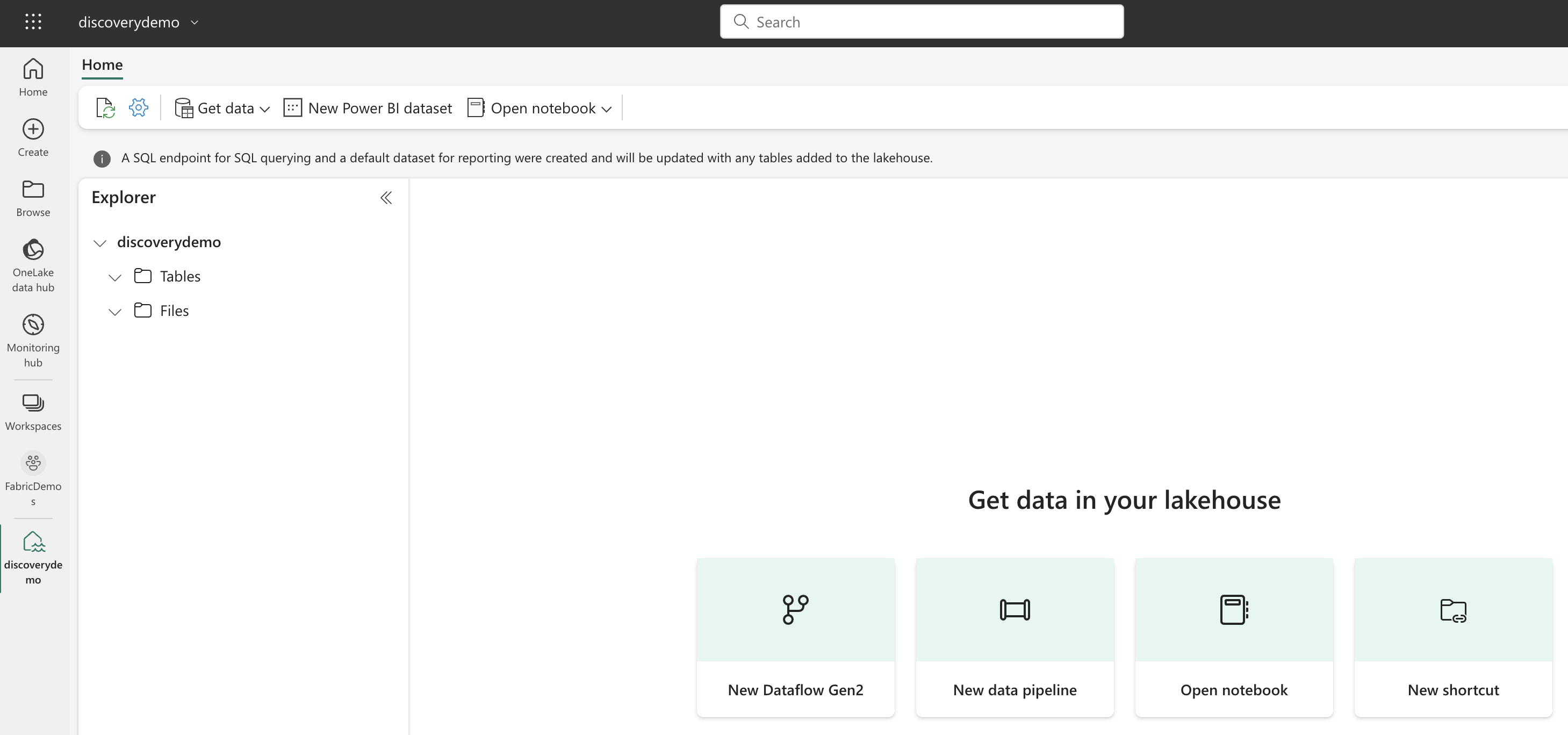

Microsoft Fabric's Auto Discovery: a closer look

In previous posts, I dug deeper into Microsoft Fabric’s SQL-based features and we even explored OneLake using Azure Storage Explorer. In this post, I’ll take a closer look at Fabric’s auto-discovery feature using Shortcuts. Auto-discovery, what’s that? Fabric’s Lakehouses can automatically discover all the datasets already present in your data lake and expose these as tables in Lakehouses (and Warehouses). Cool, right? At the time of writing, there is a single condition: the tables must be stored in the Delta Lake format. Let’s take a closer look.

Welcome to the 3rd generation: SQL in Microsoft Fabric

While typing this blog post, I’m flying back from the Data Platform Next Step conference, where I gave a talk about using dbt with Microsoft Fabric. DP Next Step was the first conference focussed on Microsoft data services right after the announcement of Microsoft Fabric, so a lot of speakers were Microsoft employees and most of the talks had some Fabric content. Fabric, Fabric, Fabric: what is it all about? In this post, I’ll go deeper into what it is and why you should care, focusing specifically on the SQL aspect of Fabric.