Apache Spark

My take-aways from Big Data London: Delta Lake & the open lakehouses

Last week I attended Big Data London. Both days were filled with interesting sessions, most of them focussing on one of the vendors exhibiting at the conference. There are two things I am taking away from this conference: Delta Lake has won the data format wars, and your next data platform is either Snowflake or an open Lakehouse.

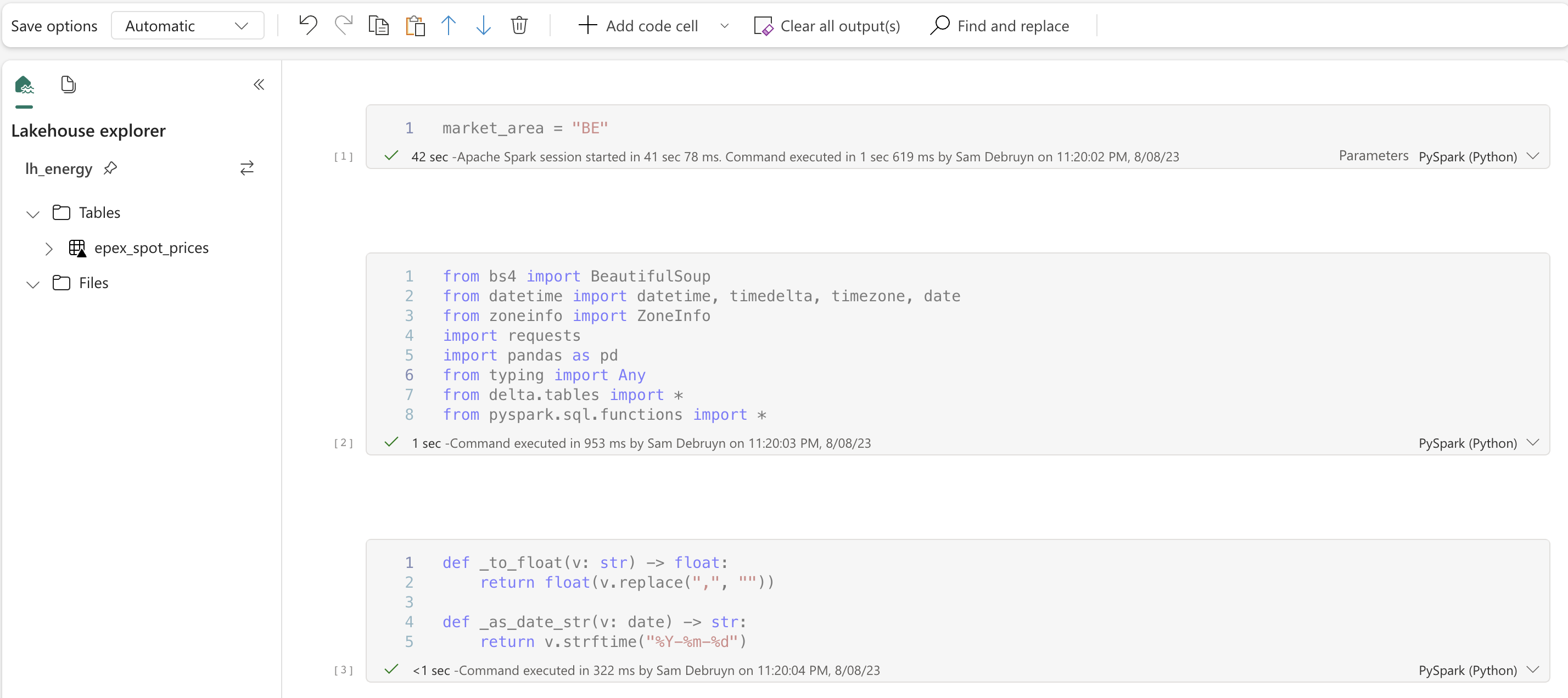

Fabric end-to-end use case: Data Engineering part 1 - Spark and Pandas in Notebooks

Welcome to the second part of a 5-part series on an end-to-end use case for Microsoft Fabric. This post will focus on the data engineering part of the use case. In this series, we will explore how to use Microsoft Fabric to ingest, transform, and analyze data using a real-world use case.

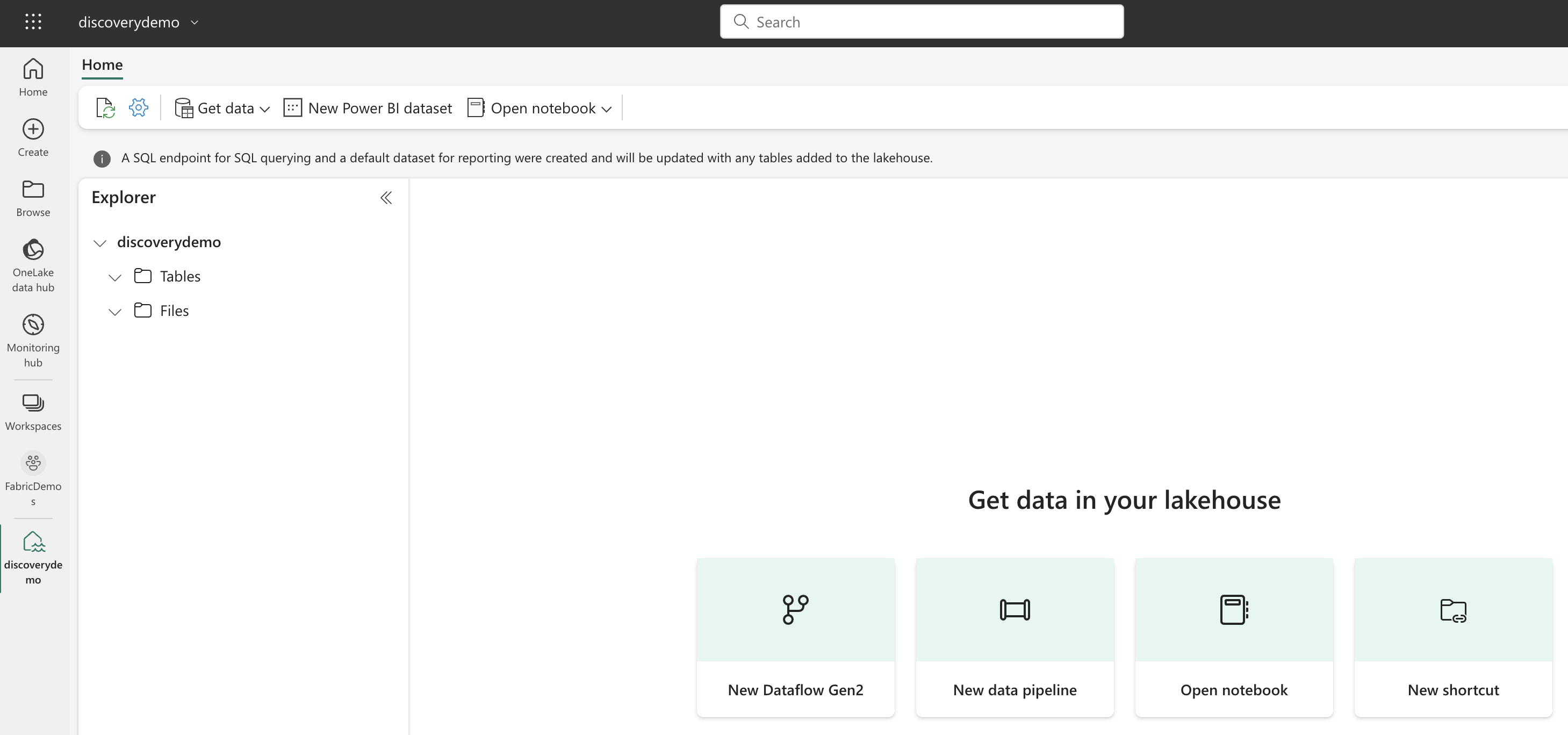

Microsoft Fabric's Auto Discovery: a closer look

In previous posts, I dug deeper into Microsoft Fabric’s SQL-based features and we even explored OneLake using Azure Storage Explorer. In this post, I’ll take a closer look at Fabric’s auto-discovery feature using Shortcuts. Auto-discovery, what’s that? Fabric’s Lakehouses can automatically discover all the datasets already present in your data lake and expose these as tables in Lakehouses (and Warehouses). Cool, right? At the time of writing, there is a single condition: the tables must be stored in the Delta Lake format. Let’s take a closer look.
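To make the "must be stored in the Delta Lake format" condition a bit more concrete: a Delta table is a folder of data files with a `_delta_log` subfolder holding the transaction log. The snippet below is a minimal sketch of that idea, assuming a local folder standing in for the data lake. `find_delta_tables` is a hypothetical helper I made up for illustration; it is not how Fabric implements auto-discovery.

```python
from pathlib import Path
import tempfile


def find_delta_tables(root: Path) -> list[str]:
    """Return the names of immediate subfolders of `root` that look like Delta tables.

    Assumption: a folder counts as a Delta table if it contains a `_delta_log`
    subfolder. A real engine would also read the log itself.
    """
    return sorted(
        child.name
        for child in root.iterdir()
        if child.is_dir() and (child / "_delta_log").is_dir()
    )


# Small demo with a throwaway folder structure (names are made up).
with tempfile.TemporaryDirectory() as tmp:
    lake = Path(tmp)
    (lake / "sales" / "_delta_log").mkdir(parents=True)  # looks like a Delta table
    (lake / "raw_csv").mkdir()                           # plain folder, no log
    print(find_delta_tables(lake))
```

In this demo only the `sales` folder is reported, since `raw_csv` has no transaction log.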

Exploring OneLake with Microsoft Azure Storage Explorer

Recap: OneLake & Delta Lake

One of the coolest things about Microsoft Fabric is that it nicely decouples storage and compute and is very transparent about the storage: everything ends up in OneLake. This is a huge advantage over other data platforms: you don’t have to worry about moving data around, because it is always available wherever you need it.