Fabric: Lakehouse or Data Warehouse?

There are 2 kinds of companies currently active in the Microsoft data space: those who are migrating to Microsoft Fabric, and those who will soon be planning their migration to Microsoft Fabric. 😅 One question that often comes back is

Should I focus on the Lakehouse or the Data Warehouse?

Let’s answer that in this post. I can already tell you this: you’re asking the wrong question 😉

In previous posts like this one , I already went a bit deeper in what these 2 things are and why they exist. To recap that a bit: they are both interfaces to your data in OneLake, built on the same Polaris engine. Although, they are not the same thing and you shouldn’t ignore one or the other. A successful Fabric implementation will use both.

The question then changes to how and when you use them. But more interestingly, let’s also look at how you can combine them to get the best of both worlds.

The Data Warehouse: SQL

I don’t want to sound pedantic, but the Data Warehouse in Fabric, is actually a Data Lakehouse. A typical lakehouse would serve as a transactional SQL engine on top of a data lake and that is exactly what Fabric’s Data Warehouse is. It exposes your data in OneLake as a T-SQL interface. And it does so in a very seamless way, so that you probably wouldn’t even notice that you’re not working with a traditional SQL Server database.

A lakehouse is a new, open architecture that combines the best elements of data lakes and data warehouses. Lakehouses are enabled by a new system design: implementing similar data structures and data management features to those in a data warehouse directly on top of low cost cloud storage in open formats.

Source: Databricks, 2020

A data lakehouse can be defined as a modern data platform built from a combination of a data lake and a data warehouse. More specifically, a data lakehouse takes the flexible storage of unstructured data from a data lake and the management features and tools from data warehouses, then strategically implements them together as a larger system. This integration of two unique tools brings the best of both worlds to users.

Source: Oracle

You can use the Data Warehouse to work with Petabytes of data with all the T-SQL tools you already know. One of my personal favourites that I helped to build myself is dbt . Microsoft is officially supporting this and I think it’s one of the best ways to work with the data in your Data Warehouse.

The Lakehouse: Python

Okay, so if the Data Warehouse is actually a lakehouse, then what is the Lakehouse in Fabric? In terms of T-SQL support it works in exactly the same way as your Data Warehouse, except for one big difference: it’s read-only. You cannot use statements like INSERT, DELETE, or CREATE TABLE AS SELECT. You can still write any SELECT query and create VIEWs. All of this is available through the SQL Endpoint which Fabric automatically provisions for every Lakehouse in your workspace.

But as you can see from the header above, the Lakehouse is not just a T-SQL interface, which is only available through the SQL Endpoint. The main interface to talk to the Lakehouse is the Spark runtime. This is an engine available in Python, Scala, Java, and R. You can use any of these programming languages to talk to your data. Popular data engineering frameworks like Apache Spark and Pandas are available out of the box. And you can install any other Python package you want. You can write typical Jupyter Notebooks or regular Python scripts or Spark jobs and run them on the Lakehouse.

Combining the 2

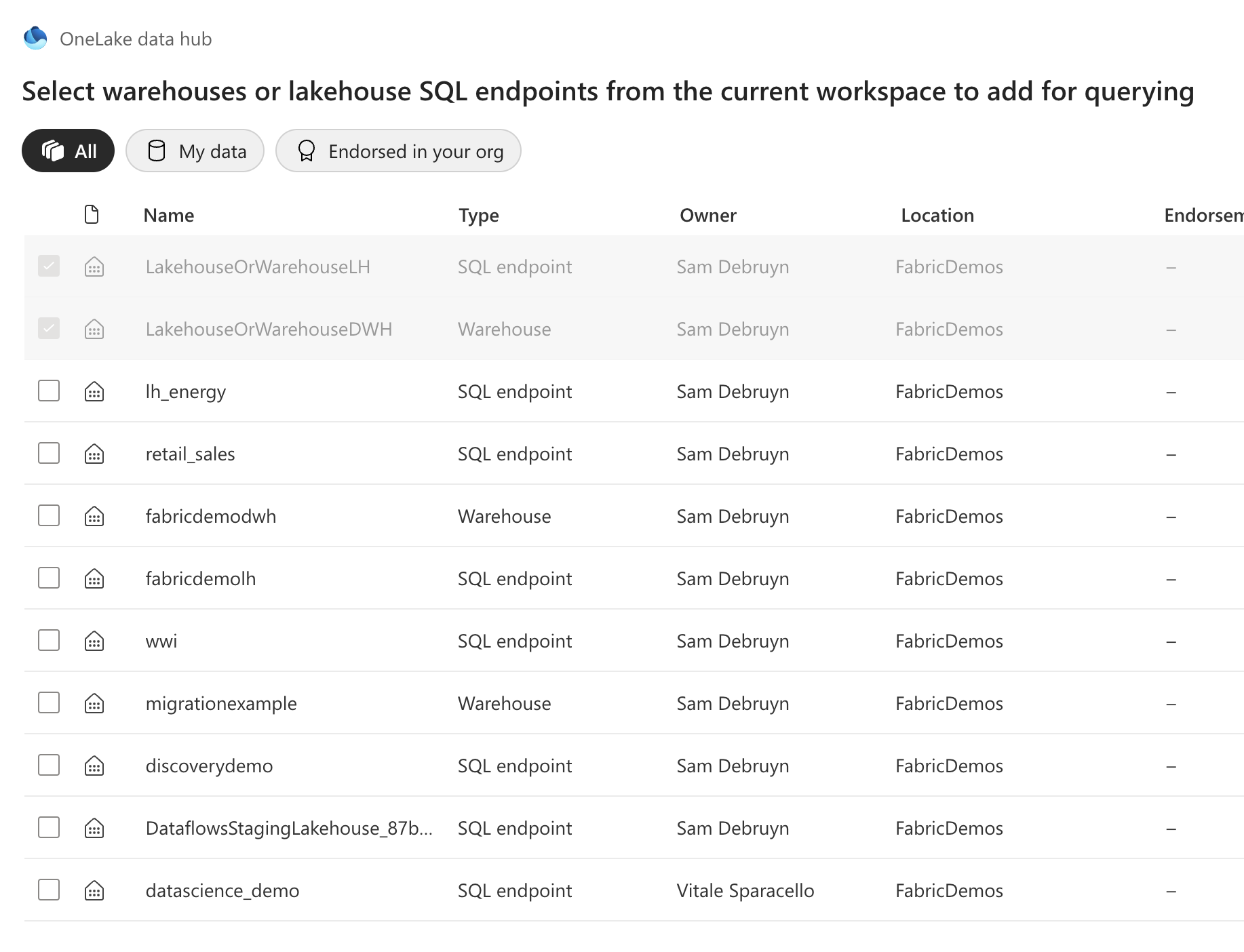

Since both experiences are available in T-SQL, this is the easiest way to combine data from both. In every Fabric Data Warehouse, you can link your Lakehouse to it and query data from both in a single query. You could have a dimension in your Data Warehouse and a facts table in your Lakehouse and join them in a single query.

You can link any Lakehouse or Data Warehouse to your Data Warehouse

1SELECT

2 SUM([SaleValue]), -- column in the Lakehouse

3 [CustomerType] -- column in the Data Warehouse

4FROM [MyLakehouse].[dbo].[FactSales] -- table in the Lakehouse

5INNER JOIN [dbo].[DimCustomer] -- table in the Data Warehouse

6ON [FactSales].[CustomerId] = [DimCustomer].[CustomerId]

7GROUP BY [CustomerType]

When to use what?

My personal recommendation is to follow the typical medallion architecture. You would ingest your raw data from your data sources into the Files part of your Lakehouse, forming the typical Bronze layer.

There, you could then use the typical data engineering frameworks to clean your data and store the typical Silver layer as Delta Tables in the Lakehouse.

From there, you can use the Data Warehouse to create the Gold layer. This is where you would create your dimensional model and expose it to your end users. You’d be using the common CTAS (Create Table As Select) statements to transform your data from the Silver layer into the Gold layer in the Data Warehouse

Typical medallion architecture on Fabric

Conclusion

There is no choice to make between the Lakehouse and the Data Warehouse. We’ve also discussed how the Data Warehouse in Fabric is actually a Lakehouse. If you’re only using one of the 2, you’re probably missing out on some features that would make your life easier. The Data Warehouse is a great way to transform and serve data in SQL, which is the lingua franca of data teams in general. The Lakehouse is a great way to ingest and transform data in Python, which is what most data engineers have come to known and love. A successful strategy will use both.

You might also like

If you liked this article, follow me on LinkedIn or other social media to stay up-to-date with my latest posts. You might also like the following 2 posts about related topics:

Is Microsoft Fabric just a rebranding?

It’s a question I see popping up every now and then. Is Microsoft Fabric just a rebranding of existing Azure services like Synapse, Data Factory, Event Hub, Stream Analytics, etc.? Is it something more? Or is it something entirely new? I hate clickbait titles as much as you do. So, before we dive in, let me answer the question right away. No, Fabric is not just a rebranding. I would not even describe Fabric as an evolution (as Microsoft often does), but rather as a revolution! Now, let’s find out why.

Data Agility Unleashed: A Deep Dive into Microsoft Fabric's Features (dataroots Fabric Event)

I was invited to speak at the dataroots Microsoft Fabric Event in Leuven, Belgium. The event was held at De Hoorn, an old brewery in the city center. Speakers: Sam Debruyn (tech lead data & cloud @ dataroots) Jonas Tundo (CEO @ dataroots) Priya Sathy (Partner Director of Products @ Microsoft) Pictures: